The National Transportation Safety Board concluded its first investigation of a fatal crash involving an autonomous test vehicle by issuing several recommendations aimed at tightening the limited oversight of companies that test self-driving cars on public roads.

Among other things, the board called for developers of autonomous vehicles to be required to assess their safety procedures in a written report and not test cars on public roads until regulators sign off on it.

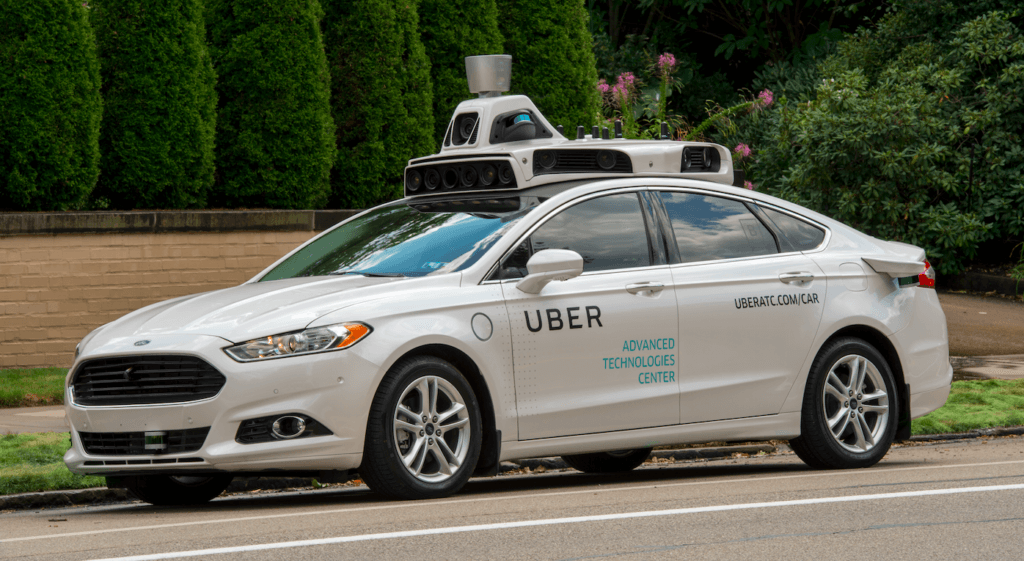

“We feel that we’ve identified certain gaps and these gaps need to be filled, especially when we’re out testing vehicles on public roadways,” NTSB Chairman Robert Sumwalt said after a board meeting on the March 2018 crash involving an Uber Technologies Inc. self-driving test vehicle and a pedestrian.

The case had been closely watched in the emerging autonomous vehicle industry, which has attracted billions of dollars in investment from companies such as General Motors Co. and Alphabet Inc. in an attempt to transform transportation.

“Ultimately, it will be the public that accepts or rejects automated driving systems and the testing of such systems on public roads,” Sumwalt said. “Any company’s crash affects the public confidence. Anybody’s crash is everybody’s crash.”

The NTSB detailed a litany of failings by Uber that contributed to the death of Elaine Herzberg, 49, who was hit by an Uber self-driving SUV as she walked her bicycle across a road at night in Tempe, Arizona.

Uber halted self-driving car tests after the accident. Information released since then has highlighted a series of technological and human lapses that the board cited as having contributed to the crash.

Uber resumed self-driving testing late last year in Pittsburgh.

The “immediate cause” of the crash was the backup safety driver’s failure to monitor the road ahead because she was distracted by her mobile device, the board found. A lax safety program at Uber also contributed to the accident.

The National Highway Traffic Safety Administration said it would review the NTSB’s report and recommendations. “While the technology is rapidly developing, it’s important for the public to note that all vehicles on the road today require a fully attentive operator at all times,” the agency said in a statement.

In a statement, Uber said it regrets the fatal crash and is committed to improving the safety of its self-driving program and to implementing the NTSB’s recommendations.

“Over the last 20 months, we have provided the NTSB with complete access to information about our technology and the developments we have made since the crash,” Nat Beuse, head of safety for Uber’s self-driving car operation, said in a statement. “While we are proud of our progress, we will never lose sight of what brought us here or our responsibility to continue raising the bar on safety.”

The Uber vehicle’s radar sensors first observed Herzberg about 5.6 seconds before impact, before she entered the vehicle’s lane of travel, and the system initially classified her as a vehicle. The self-driving system then changed its classification of her among different types of objects several times and failed to predict that her path would cross the test SUV’s lane, according to the NTSB.

The modified Volvo SUV being tested by Uber wasn’t programmed to recognize and respond to pedestrians walking outside of marked crosswalks, nor did the system allow the vehicle to automatically brake before an imminent collision. The responsibility to avoid accidents fell to the lone safety driver monitoring the vehicle’s automation system. Other companies place a second human in the vehicle for added safety.

The safety driver was streaming a television show on her phone in the moments before the crash, despite company policy prohibiting drivers from using mobile devices, according to police. The NTSB has also said that Uber’s Advanced Technologies Group, which was testing self-driving cars on public streets in Tempe, didn’t have a standalone safety division, a formal safety plan, standard operating procedures or a manager focused on preventing accidents.

“The inappropriate actions of both the automatic driving system as implemented and the vehicle’s human operator were symptoms of a deeper problem, the ineffective safety culture that existed at the time,” Sumwalt said at the opening of the hearing.

Uber made extensive changes to its self-driving system after several reviews of its operation and findings by NTSB investigators. The board pointed out that Uber had been very cooperative with its inquiry.

The company told the NTSB that its new software would have correctly identified Herzberg and triggered controlled braking to avoid her more than 4 seconds before the original impact, according to the NTSB.
